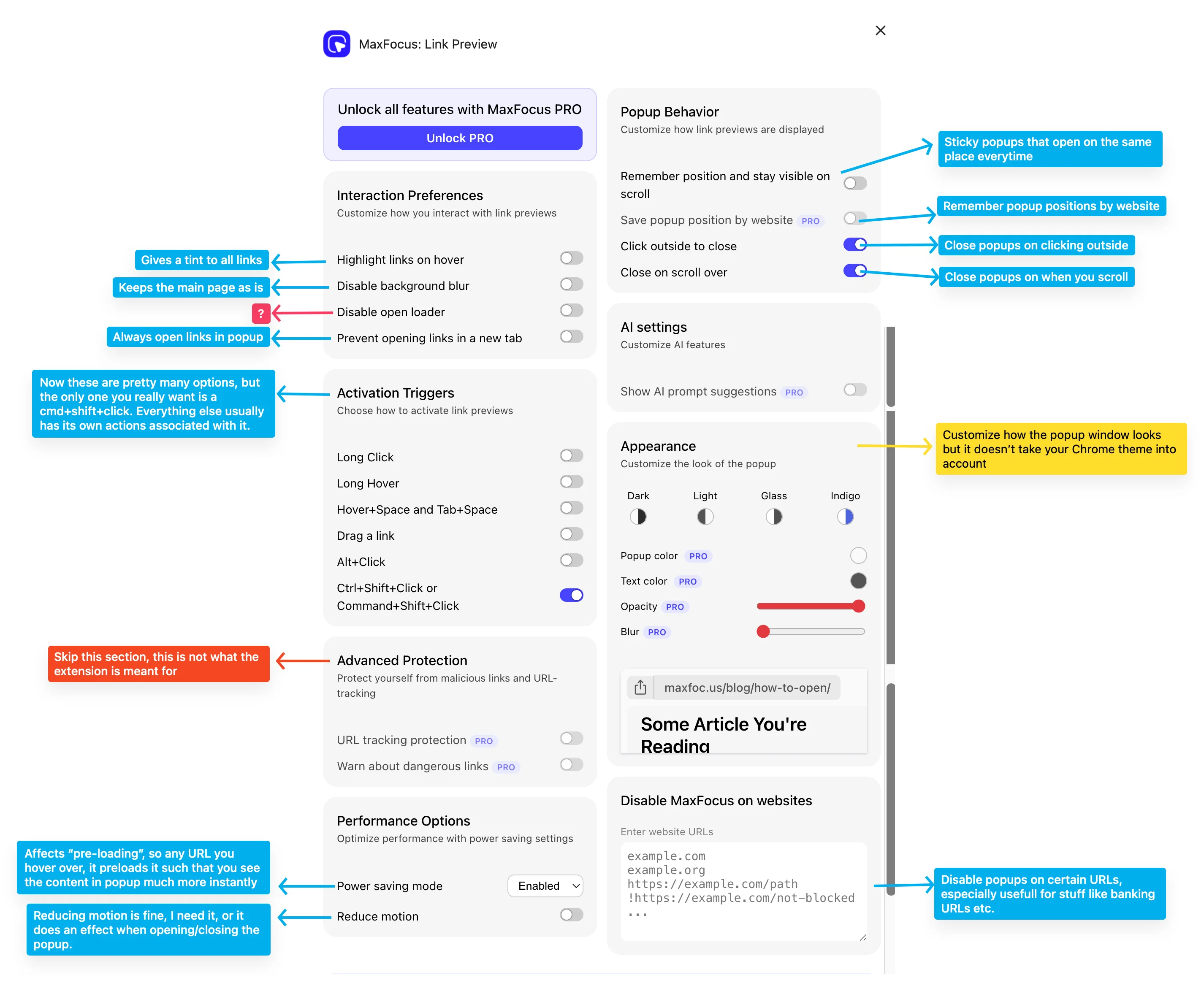

MaxFocus is a new-ish extension that shows a link preview for any link you hover over. It works on apps that do not allow iframe embeds (like Notion), which sets it apart from the majority of extensions out there. You really do not need the PRO option with this one unless you want AI summaries of the page, and I can usually skim the page faster than the AI can generate a good summary. The look and feel are customizable, and you can make it look just like Arc, a feature I always missed when I switched back to Chrome.

The feature settings are a bit messy, but this quick guide should help.

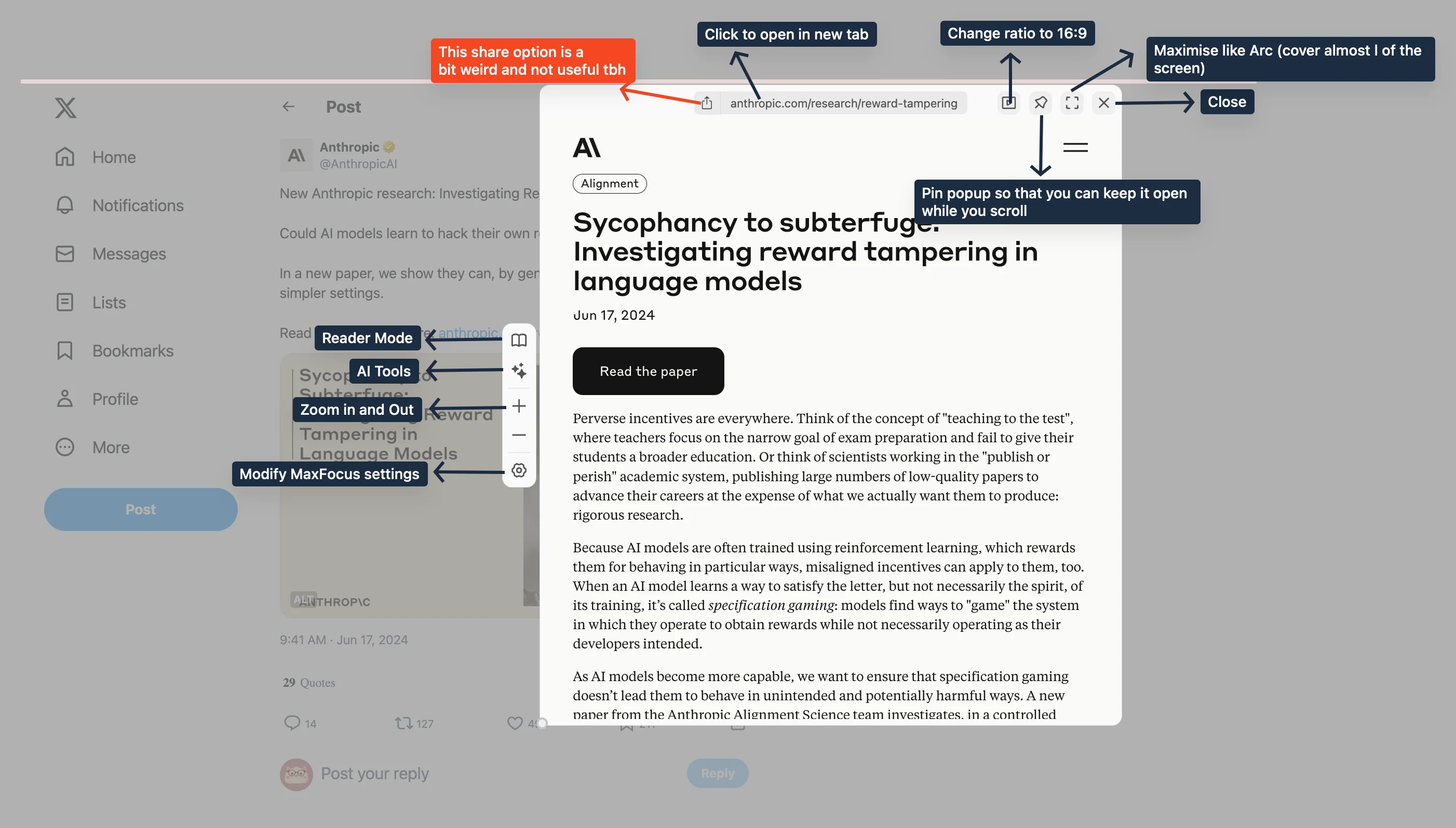

And this is what the popup looks like:

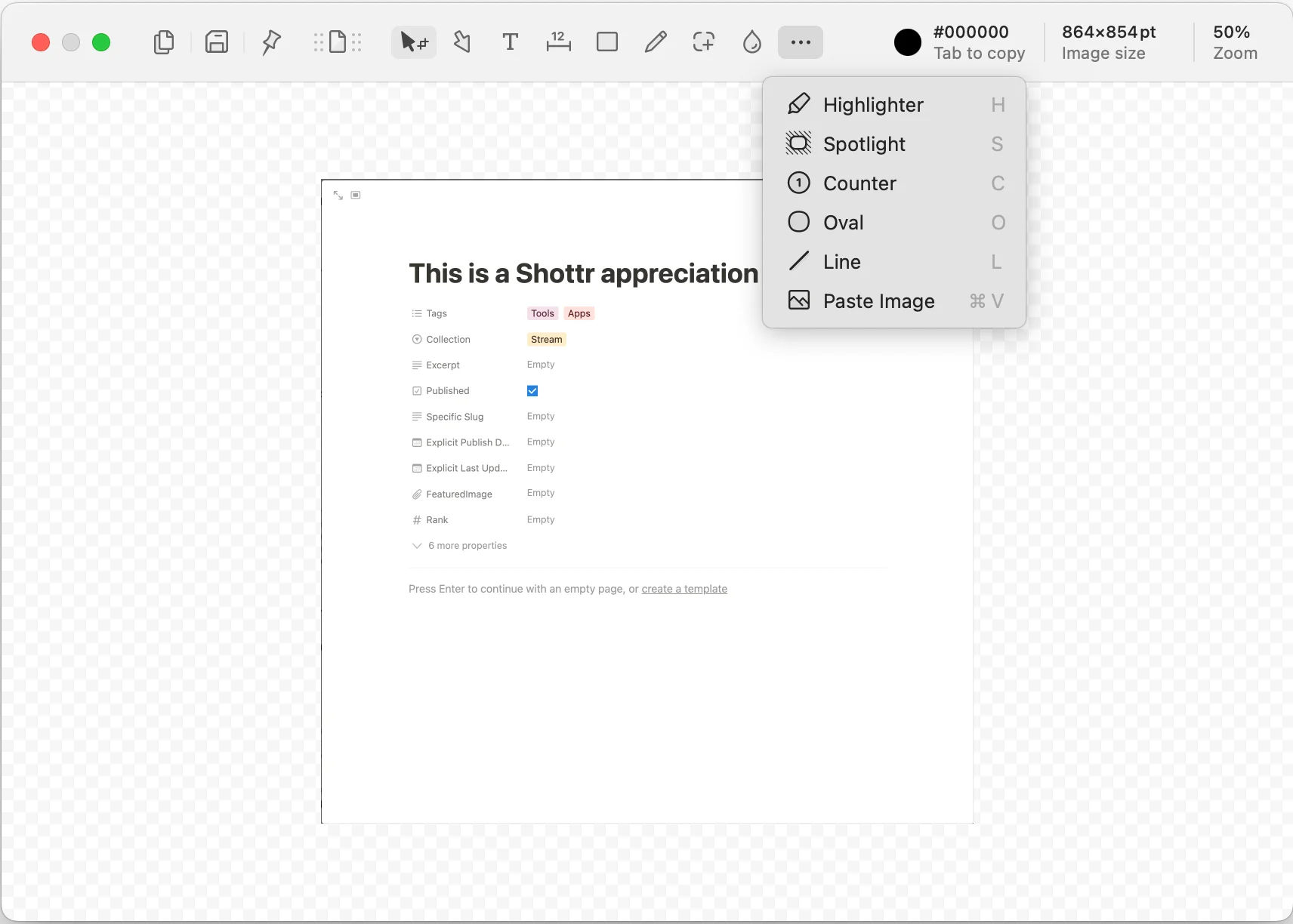

Something you might find frustrating is that you cannot access the right-click menu inside this popup. When I open articles like these, I often want to save them to Reader. But because clicking the top link opens it in a new tab, and I cannot right-click to use the Save to Reader option, I often end up copying the link address and then saving it in Reader. I wish the share option copied the link or offered the macOS share menu (or a custom one) instead of the QR code it has at the moment.

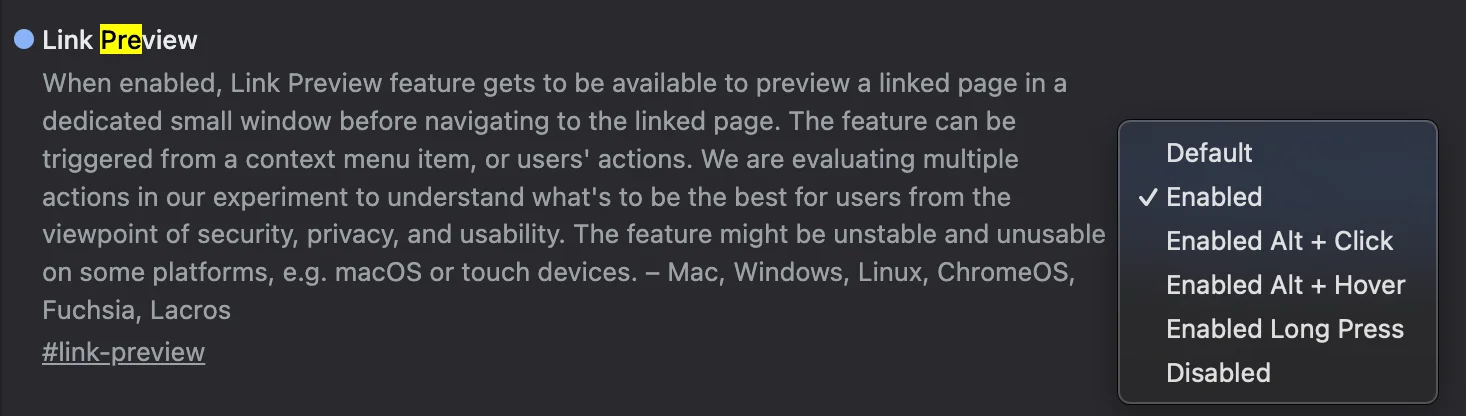

This is what the native Chrome link previews look like. They are basically a headless window, always at the bottom right, and clicking on one opens the site in a new tab. Not a huge fan at the moment.

![[Image version] And the outcome of the demo video above. Shows 3 entries, 2 of type debit, 1 of credit, across various inferred categories etc.](/_astro/Untitled.DG0K9Q9G_Z11vR9n.webp)